Computer Vision for Remote Sensing

Remote sensing satellites generate vast amounts of data over a wide range of the electromagnetic spectrum. The analysis of these data and the targeted extraction of useful knowledge from them exceed the capabilities of most traditional analysis methods. In recent years, deep neural networks have shown promising results in learning to extract knowledge from remote sensing data.

In our lab, we use a range of state-of-the-art deep neural network architectures to solve tasks such as image classification, regression, semantic segmentation, and object detection on remote sensing data, addressing the following research questions:

Climate Change

Climate change is one of the most pressing issues on a global scale, if not the most pressing one. We are interested in quantifying the effects that amplify climate change. This effort includes estimating greenhouse gas emissions both directly from remote imaging data and indirectly from proxy data such as detailed land use classifications and traffic volumes.
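One common way to turn proxy data into an emission estimate is to multiply each activity (for example, the area of a land use class or a traffic volume) by an emission factor and sum over all categories. The sketch below illustrates only that bookkeeping under stated assumptions; it is not necessarily the method used in our studies, and the category names, the helper `estimate_emissions`, and all factor values are hypothetical placeholders.

```python
# Minimal sketch of "activity data x emission factor" bookkeeping that turns
# proxy quantities into an emission estimate. All categories and factor
# values are hypothetical placeholders, not calibrated data.

# Hypothetical emission factors (tonnes CO2 per unit of activity per year).
EMISSION_FACTORS = {
    "industrial_area_km2": 5000.0,   # per km^2 of industrial land use
    "residential_area_km2": 800.0,   # per km^2 of residential land use
    "truck_km": 0.0009,              # per vehicle-km driven by trucks
}


def estimate_emissions(activity: dict[str, float],
                       factors: dict[str, float] = EMISSION_FACTORS) -> float:
    """Sum activity * factor over all proxy categories present in `activity`."""
    total = 0.0
    for category, amount in activity.items():
        if category not in factors:
            raise KeyError(f"no emission factor for proxy {category!r}")
        total += amount * factors[category]
    return total


# Proxies for one hypothetical region, e.g. areas from a land use
# classification and vehicle-km from a traffic detection model.
region = {
    "industrial_area_km2": 2.5,
    "residential_area_km2": 10.0,
    "truck_km": 1_000_000.0,
}
print(estimate_emissions(region))  # approximately 21400 (hypothetical t CO2/yr)
```

In this framing, the deep learning models supply the activity values (land use areas, vehicle counts), while the factors would have to come from an external inventory.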

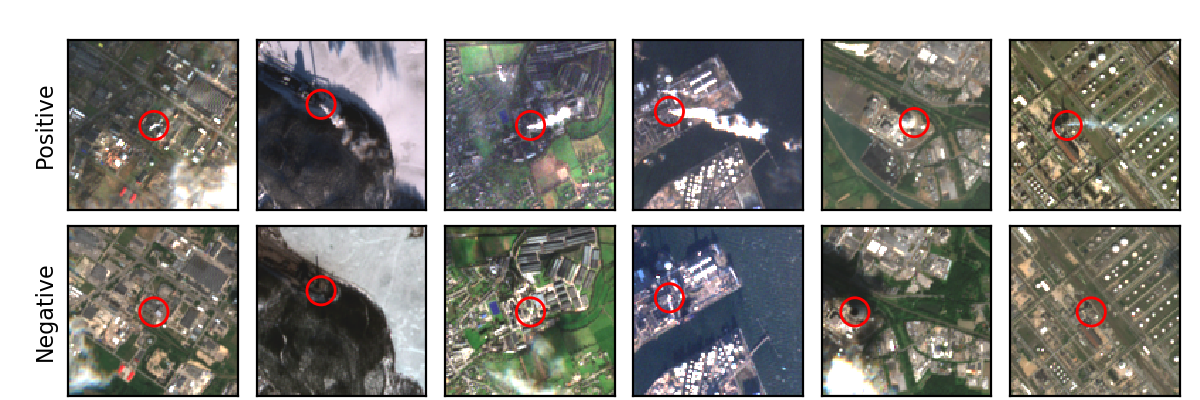

For instance, Mommert et al. (2020) investigated the possibility of identifying industrial smoke plumes in Sentinel-2 multiband images, with promising results: our trained models reliably distinguish between natural clouds and smoke plumes. We are currently extending this study to provide emission estimates from remote sensing data on a global scale.

We are also interested in topics that are related to climate change science, including the study of its effects on urbanization, ecology, and the economy, as well as providing assistance in disaster relief.

Human Health Hazards

Pollution adversely affects human health as well as our environment. Unfortunately, timely measurements of pollutants, for instance the concentrations of NO2 and other trace gases, are not available on a global scale. By combining remote sensing data with ground-based measurements in a machine learning approach, we are able to estimate pollution levels on the ground where no in-situ data are available.
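The underlying idea, calibrating satellite observations against ground stations and then predicting at locations without a station, can be sketched in a few lines. This is a minimal illustration only: a single-feature ordinary least-squares fit stands in for the machine learning models, and the function name `fit_line` and all values and units are hypothetical.

```python
# Minimal sketch: learn a mapping from a satellite-derived NO2 signal to
# ground-level NO2 at station locations, then predict where no station
# exists. Single-feature least squares stands in for a real ML model;
# all numbers are made-up illustrative values.

def fit_line(x: list[float], y: list[float]) -> tuple[float, float]:
    """Return (slope, intercept) of the least-squares line y ~ slope * x + intercept."""
    n = len(x)
    if n < 2 or n != len(y):
        raise ValueError("need at least two paired samples")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    if sxx == 0:
        raise ValueError("feature has zero variance")
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# Training pairs at station locations (hypothetical values).
satellite_at_stations = [1.0, 2.0, 3.0, 4.0]  # satellite signal, arbitrary units
ground_no2 = [12.0, 19.0, 26.0, 33.0]         # in-situ NO2, e.g. in ug/m^3

slope, intercept = fit_line(satellite_at_stations, ground_no2)

# Predict surface NO2 for a pixel that has no in-situ measurement.
prediction = slope * 2.5 + intercept
print(slope, intercept, prediction)  # 7.0 5.0 22.5
```

A real model would use many more inputs (for example, several remote sensing products plus meteorological data) and a nonlinear learner, but the train-at-stations, predict-everywhere structure stays the same.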

Self-supervised Learning

Self-supervised learning (SSL) opens an avenue toward label-efficient training of deep learning models by learning rich latent representations from large amounts of unlabeled data. SSL is a natural fit for remote sensing, which offers almost unlimited amounts of multi-modal and temporally resolved data for such training. Our team has shown the usefulness of this approach for Earth observation data.
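A widely used contrastive SSL objective is the InfoNCE loss, which rewards an encoder for embedding two views of the same location close together and views of different locations far apart. The sketch below is a minimal, framework-free illustration under stated assumptions: each location is observed by two modalities (for example, radar and optical images of the same area) that some encoder has already turned into embeddings; the temperature of 0.1 and the toy embeddings are hypothetical, and this is not necessarily the exact objective used in our work.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def info_nce(z_a: list[list[float]], z_b: list[list[float]],
             temperature: float = 0.1) -> float:
    """Mean InfoNCE loss over a batch.

    z_a[i] and z_b[i] embed the same location seen by two modalities
    (a positive pair); every z_b[j] with j != i serves as a negative.
    """
    n = len(z_a)
    total = 0.0
    for i in range(n):
        logits = [cosine_similarity(z_a[i], z_b[j]) / temperature
                  for j in range(n)]
        # Numerically stable log-sum-exp over all candidates.
        m = max(logits)
        log_sum_exp = m + math.log(sum(math.exp(v - m) for v in logits))
        # Cross-entropy with the matching index i as the correct "class".
        total += log_sum_exp - logits[i]
    return total / n


# Toy 2-D embeddings for two locations (hypothetical values).
aligned = [[1.0, 0.0], [0.0, 1.0]]
swapped = [[0.0, 1.0], [1.0, 0.0]]  # modality B paired with the wrong location
print(info_nce(aligned, aligned))   # close to 0: positives are most similar
print(info_nce(aligned, swapped))   # about 10: positives are least similar
```

This is the one-directional form of the loss; symmetric variants average it over both directions (modality A to B and B to A). No labels enter the computation, which is what makes the approach attractive for large unlabeled archives.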

More information coming soon!

Publications

Our research results have been published at international conference workshops, including workshops at NeurIPS.

Please find below a list of featured publications from our lab related to remote sensing:

- Parameter Efficient Self-Supervised Geospatial Domain Adaptation

- Sparse Multimodal Vision Transformer for Weakly Supervised Semantic Segmentation

- Masked Vision Transformers for Hyperspectral Image Classification

- Self-supervised Vision Transformers for Land-cover Segmentation and Classification

- Contrastive Self-Supervised Data Fusion for Satellite Imagery

- Commercial Vehicle Traffic Detection from Satellite Imagery with Deep Learning

- Estimation of Air Pollution with Remote Sensing Data: Revealing Greenhouse Gas Emissions from Space

- Visualization of Earth Observation Data with the Google Earth Engine

- Power Plant Classification from Remote Imaging with Deep Learning

- A Novel Dataset for the Prediction of Surface NO2 Concentrations from Remote Sensing Data

- Characterization of Industrial Smoke Plumes from Remote Sensing Data

Getting Involved

If you are interested in joining our team as a Bachelor's or Master's student, there is a good chance that we have a project that suits your skill set and interests. Please have a look here for more information.