Contrastive Self-Supervised Data Fusion for Satellite Imagery

Supervised learning typically requires large amounts of labeled data. For satellite imagery in particular, unlabeled data is abundant, while labeling is often cumbersome and expensive. It is therefore worthwhile to minimize the amount of labeled data required to perform well on a given downstream task. In this work, we use contrastive learning in a multi-modal setup to achieve this.

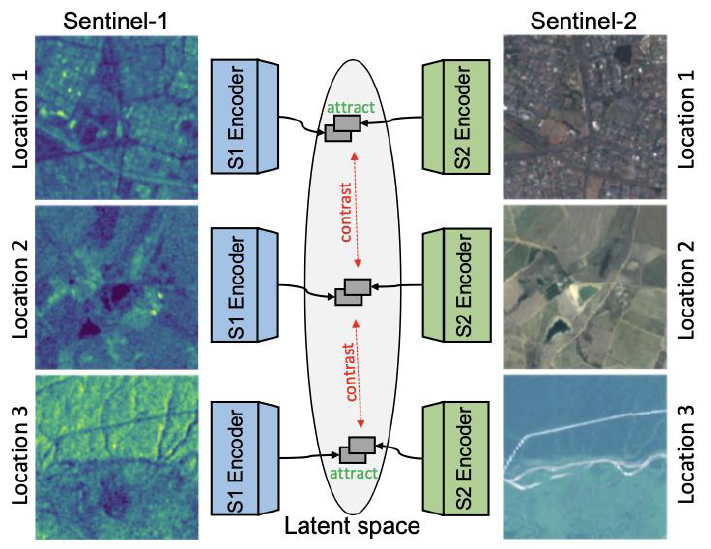

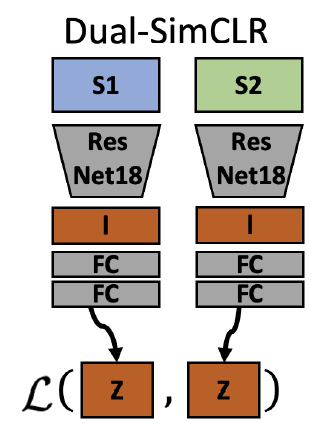

We experiment with different self-supervised learning approaches, including SimCLR (Chen et al., 2020) and Multi-Modal Alignment (MMA, Windsor et al., 2021), to pretrain our model. Based on SimCLR, we build our own Dual-SimCLR approach, which is depicted above. In all our approaches, we abstain from data augmentations, which are typically required for contrastive self-supervised learning. Instead, we take advantage of the co-located nature of the data modalities to provide the contrast required for the learning process.
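
To make the idea of contrasting co-located modalities concrete, here is a minimal sketch of a symmetric cross-modal InfoNCE loss in plain Python. It is an illustration under assumptions, not the Dual-SimCLR loss itself: the function names, the temperature value, and the use of only cross-modal negatives are all hypothetical choices. Row `i` of each input is assumed to hold the embedding of the same location, so the Sentinel-1 and Sentinel-2 embeddings of one patch form the positive pair and all other patches in the batch act as negatives.

```python
import math


def l2_normalize(vec):
    """Scale a vector to unit length (zero vectors are returned unchanged)."""
    norm = math.sqrt(sum(x * x for x in vec)) or 1.0
    return [x / norm for x in vec]


def cross_modal_info_nce(s1_embeddings, s2_embeddings, temperature=0.1):
    """Symmetric InfoNCE loss over co-located embedding pairs.

    Hypothetical helper: row i of both inputs is assumed to describe the
    same location, so (i, i) is the positive pair and (i, j != i) are negatives.
    """
    if not s1_embeddings or len(s1_embeddings) != len(s2_embeddings):
        raise ValueError("need two non-empty batches of equal size")

    a = [l2_normalize(v) for v in s1_embeddings]
    b = [l2_normalize(v) for v in s2_embeddings]
    n = len(a)

    # logits[i][j]: temperature-scaled cosine similarity between
    # modality-1 sample i and modality-2 sample j.
    logits = [
        [sum(x * y for x, y in zip(a[i], b[j])) / temperature for j in range(n)]
        for i in range(n)
    ]

    def mean_cross_entropy(rows):
        # Cross-entropy with the diagonal entry as the target class,
        # using the log-sum-exp trick for numerical stability.
        total = 0.0
        for i, row in enumerate(rows):
            row_max = max(row)
            log_sum = row_max + math.log(sum(math.exp(v - row_max) for v in row))
            total += log_sum - row[i]
        return total / len(rows)

    transposed = [list(col) for col in zip(*logits)]
    # Average both directions: modality 1 -> 2 and modality 2 -> 1.
    return 0.5 * (mean_cross_entropy(logits) + mean_cross_entropy(transposed))
```

The loss is small when each embedding is most similar to its co-located counterpart and large when it is more similar to an embedding from a different location. No augmentation appears anywhere in the sketch; the second modality plays the role that an augmented view plays in standard SimCLR.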

For pretraining, we use the SEN12MS dataset (Schmitt et al., 2019), which contains co-located pairs of Sentinel-1 and Sentinel-2 patches, and disregard the available labels. For fine-tuning, we use the GRSS Data Fusion 2020 dataset, which comes with high-fidelity land use/land cover (LULC) segmentation labels. The downstream task is learned in both a single-label and a multi-label setting.
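
The text does not say how patch-level targets are obtained from the segmentation labels. As a hedged illustration only, one common convention is to take the most frequent class in a patch as its single label, and every class covering at least some minimum share of the patch as its multi-label target. The function name, the tie-breaking rule, and the `min_fraction` threshold below are assumptions made for this sketch.

```python
from collections import Counter


def patch_labels_from_mask(mask, min_fraction=0.1):
    """Derive (single_label, multi_label) from a 2D mask of class indices.

    Hypothetical helper: the majority rule and the area threshold are
    assumptions for illustration, not taken from the study.
    """
    pixels = [cls for row in mask for cls in row]
    if not pixels:
        raise ValueError("mask must contain at least one pixel")

    counts = Counter(pixels)
    total = len(pixels)

    # Single label: the most frequent class; ties go to the smallest index.
    single_label = max(sorted(counts), key=counts.get)

    # Multi-label: every class that covers at least min_fraction of the patch.
    multi_label = sorted(
        cls for cls, count in counts.items() if count / total >= min_fraction
    )
    return single_label, multi_label


# A 4x4 toy patch: class 1 dominates, class 2 is present, class 5 is noise.
toy_mask = [
    [1, 1, 1, 2],
    [1, 1, 2, 2],
    [1, 1, 1, 5],
    [1, 1, 1, 1],
]
single, multi = patch_labels_from_mask(toy_mask)
```

For the toy patch, class 1 covers 12 of 16 pixels and class 2 covers 3, so with the assumed 10% threshold the single label is 1 and the multi-label target contains classes 1 and 2, while the lone pixel of class 5 is ignored.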

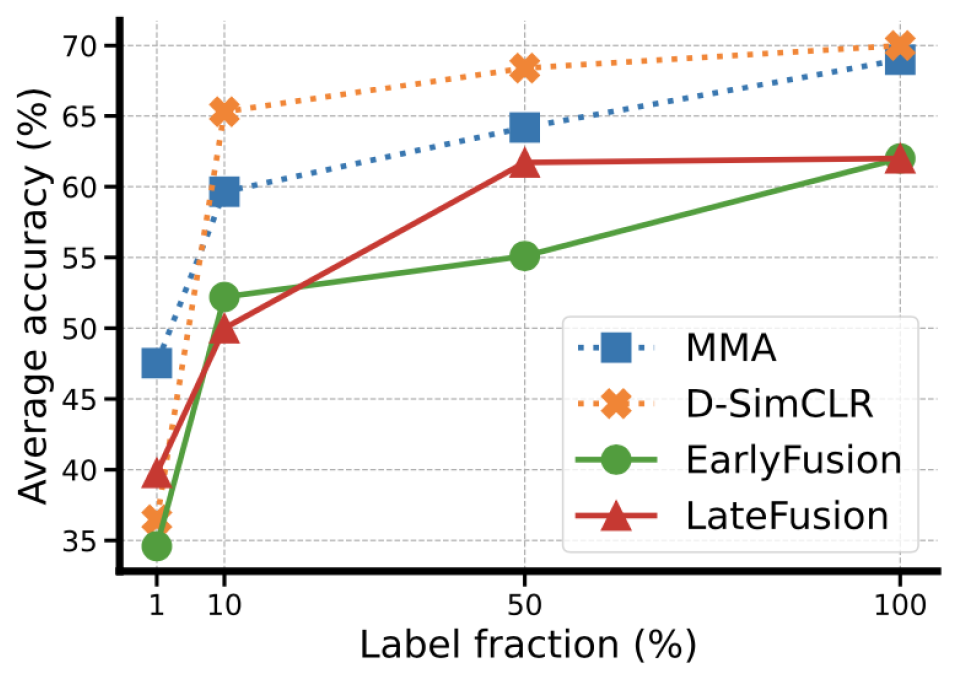

Our results show that Dual-SimCLR in particular is very successful in learning rich, informative representations. Its results on both the single-label and multi-label downstream tasks clearly outperform a range of fully supervised baselines that use single modalities or different data fusion approaches, as well as the other contrastive self-supervised approaches that were tested.

More importantly, we show that pretraining with our approach is label-efficient: by fine-tuning the pretrained backbone, we outperform every fully supervised baseline approach using only 10% of the labeled data.
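
A label-efficiency experiment of this kind needs a reproducible way to pick the reduced training set. The sketch below shows one simple option, a seeded uniform random draw of 10% of the labeled sample identifiers. The function name and the uniform sampling strategy are assumptions; the study may have selected its subset differently (for example, stratified by class).

```python
import random


def sample_labeled_subset(sample_ids, fraction=0.1, seed=0):
    """Return a reproducible random subset of labeled sample identifiers.

    Hypothetical helper: uniform sampling without replacement is an
    assumption made for this sketch.
    """
    if not 0 < fraction <= 1:
        raise ValueError("fraction must be in (0, 1]")

    ids = list(sample_ids)
    if not ids:
        return []

    # Keep at least one sample so fine-tuning always has data to train on.
    subset_size = max(1, round(len(ids) * fraction))
    rng = random.Random(seed)  # local generator: global random state is untouched
    return rng.sample(ids, subset_size)


# Example: 1000 hypothetical labeled patches, keep 10% for fine-tuning.
all_ids = list(range(1000))
fine_tune_ids = sample_labeled_subset(all_ids, fraction=0.1, seed=42)
```

Fixing the seed makes the same 100 patches come back on every run, so that comparisons between a pretrained backbone and a fully supervised baseline use identical labeled data.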

To conclude, our approach enables the label-efficient training of deep learning models for remote sensing by pretraining on a large amount of unlabeled data.

The results of this study were presented at the ISPRS 2022 congress in Nice, France (presented by Michael Mommert; the work was done by Linus Scheibenreif). More results related to this study will be presented at the CVPR 2022 Earthvision workshop. That publication will make the code and pretrained backbone architectures available for both works. Stay tuned!