Fine-grained Emotional Control of Text-To-Speech: Learning To Rank Inter- And Intra-Class Emotion Intensities

The goal is to develop a fine-grained emotional Text-To-Speech (TTS) model that allows control over the emotional intensity of each word or phoneme. This level of control can express different attitudes or intentions, even with the same emotion. However, it is nearly impossible to label intensity variations over time, so it’s necessary to learn effective emotion intensity representations without labels.

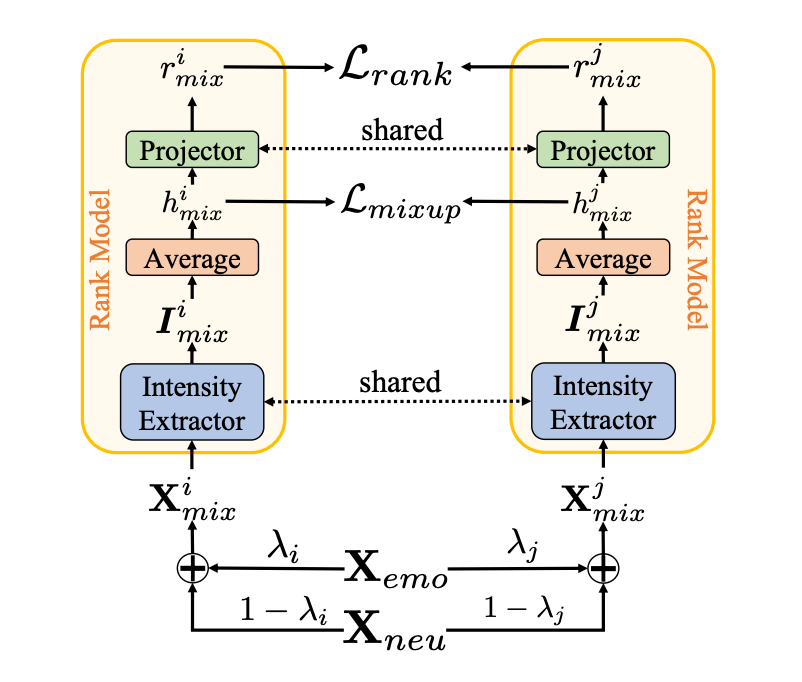

We first train an Intensity Extractor to provide intensity representations. The Extractor is built on a novel Rank model, which is straightforward yet efficient at extracting emotion intensity information, and which takes both inter-class and intra-class distances into account. The ranking is performed on two samples augmented by Mixup (“Mixup: Beyond Empirical Risk Minimization”, ICLR 2017). Each augmented sample is a mix of the same non-neutral and neutral speech. By applying different weights to the non-neutral and neutral speech, one mixture contains more of the non-neutral component than the other. In other words, the non-neutral intensity of one mixture is stronger than that of the other. By learning to rank these two mixtures, the Rank model must not only determine the emotion class (inter-class distance) but also capture the amount of non-neutral emotion present in the mixed speech, i.e., the intensity of the non-neutral emotion (intra-class distance).
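As a rough illustration of the Mixup-and-rank idea described above, the sketch below builds two mixtures from the same non-neutral and neutral features and penalises a scorer that orders them incorrectly. The function names, the assumption that both feature arrays already share the same shape, and the pairwise logistic form of the loss are illustrative choices, not details confirmed by this summary.

```python
import numpy as np


def mixup_pair(emotional, neutral, lam_i, lam_j):
    """Mix the same emotional/neutral features with two different weights.

    Assumption: `emotional` and `neutral` are feature arrays of identical
    shape (for example, aligned spectrogram frames).
    """
    emotional = np.asarray(emotional, dtype=float)
    neutral = np.asarray(neutral, dtype=float)
    if emotional.shape != neutral.shape:
        raise ValueError("features must share the same shape")
    for lam in (lam_i, lam_j):
        if not 0.0 <= lam <= 1.0:
            raise ValueError("mixing weights must lie in [0, 1]")
    # A larger weight means more of the non-neutral component.
    x_i = lam_i * emotional + (1.0 - lam_i) * neutral
    x_j = lam_j * emotional + (1.0 - lam_j) * neutral
    return x_i, x_j


def rank_loss(score_i, score_j, lam_i, lam_j):
    """Pairwise logistic ranking loss (an assumed, commonly used form).

    `score_i` and `score_j` are hypothetical scalar intensity scores that a
    model would predict for the two mixtures.
    """
    if lam_i > lam_j:
        target = 1.0
    elif lam_i < lam_j:
        target = 0.0
    else:
        target = 0.5
    diff = score_i - score_j
    # -log(sigmoid(diff)) and -log(1 - sigmoid(diff)), computed stably.
    return float(target * np.logaddexp(0.0, -diff)
                 + (1.0 - target) * np.logaddexp(0.0, diff))
```

With weights 0.8 and 0.3, the first mixture carries more non-neutral content, so the loss is small when the scorer assigns it the higher intensity score and large when the order is reversed.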

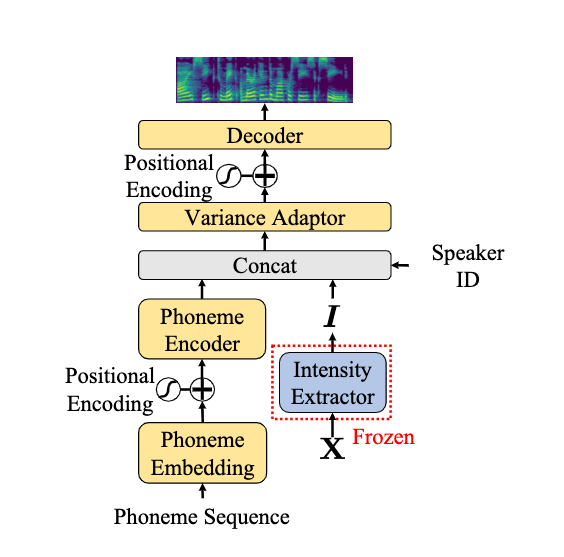

With a pre-trained Intensity Extractor, we then train a FastSpeech 2 TTS model (“FastSpeech 2: Fast and High-Quality End-to-End Text to Speech”, ICLR 2020). The architecture remains the same as in the original paper, but in this instance, the Intensity Extractor is incorporated to supply conditional intensity information.
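One plausible way to supply intensity information to the TTS model is sketched below: frame-level intensity vectors are averaged over each phoneme's frames and then added to the phoneme encoder outputs. This summary does not specify the conditioning mechanism, so the pooling step, the additive combination, and all names here are assumptions made for illustration only.

```python
import numpy as np


def pool_to_phonemes(frame_feats, durations):
    """Average frame-level vectors within each phoneme's span.

    Assumption: `durations` gives the number of frames per phoneme
    (for example, from a forced aligner) and sums to the frame count.
    """
    frame_feats = np.asarray(frame_feats, dtype=float)
    if any(d <= 0 for d in durations):
        raise ValueError("each phoneme must cover at least one frame")
    if sum(durations) != frame_feats.shape[0]:
        raise ValueError("durations must sum to the number of frames")
    pooled, start = [], 0
    for d in durations:
        pooled.append(frame_feats[start:start + d].mean(axis=0))
        start += d
    return np.stack(pooled)


def condition_encoder(encoder_out, phoneme_intensity):
    """Add phoneme-level intensity vectors to encoder outputs (assumed)."""
    encoder_out = np.asarray(encoder_out, dtype=float)
    if encoder_out.shape != phoneme_intensity.shape:
        raise ValueError("shapes must match for additive conditioning")
    return encoder_out + phoneme_intensity
```

Because the intensity vectors live at the phoneme level in this sketch, scaling or replacing the vector for a single phoneme would change the conditioning for that phoneme only, which is the kind of fine-grained control the text describes.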

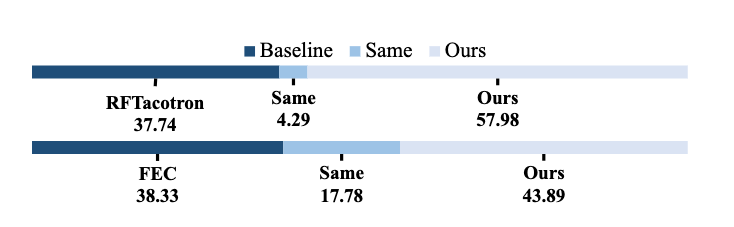

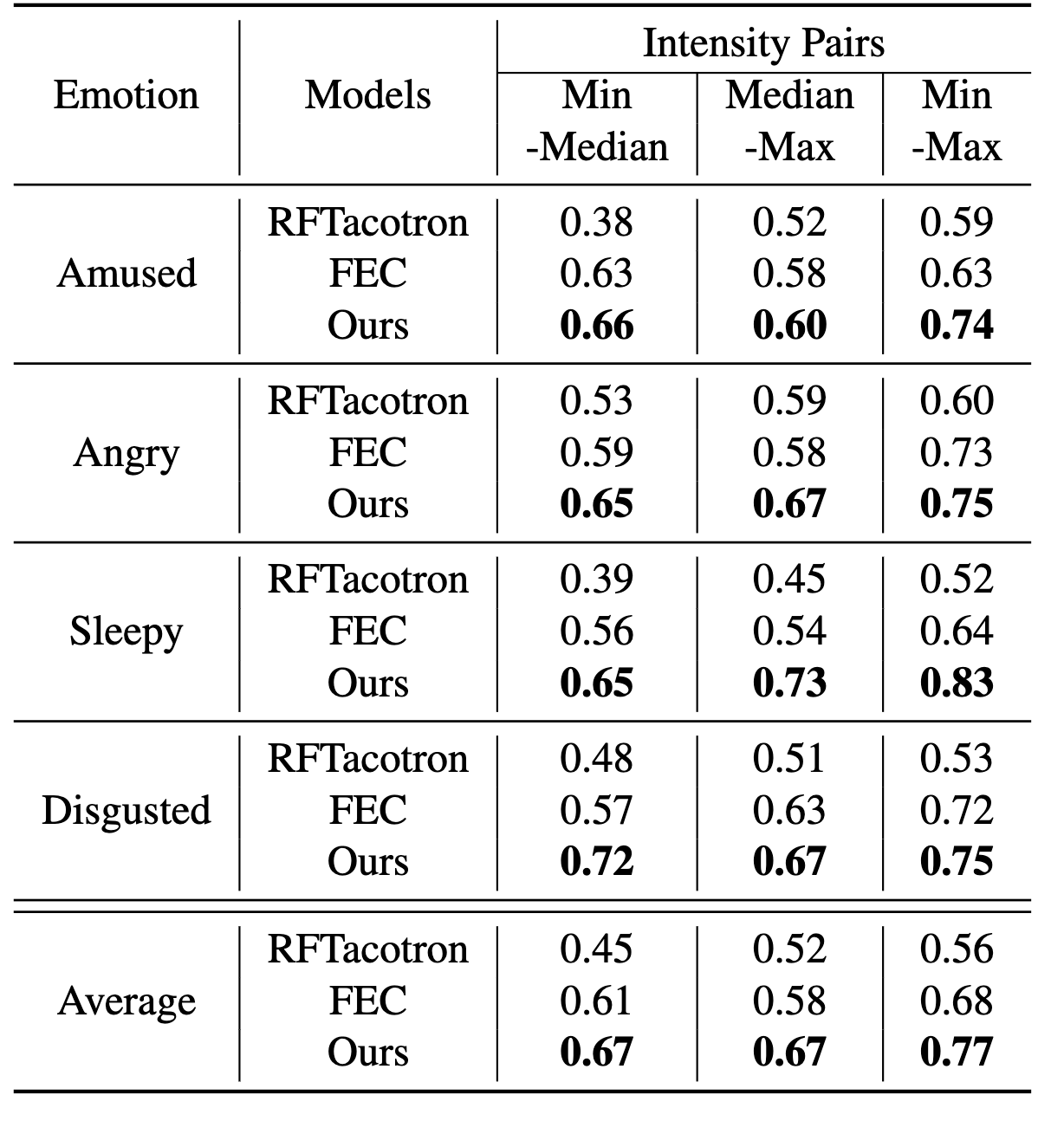

The first subjective experiment shows that when the spoken content is the same, listeners find it easier to discern differences in intensity in the speech synthesized by our model.

Another subjective experiment is the A/B preference test on emotion expressiveness. The results indicate that listeners tend to perceive the expression of emotion as clearer in the speech synthesized by our model.

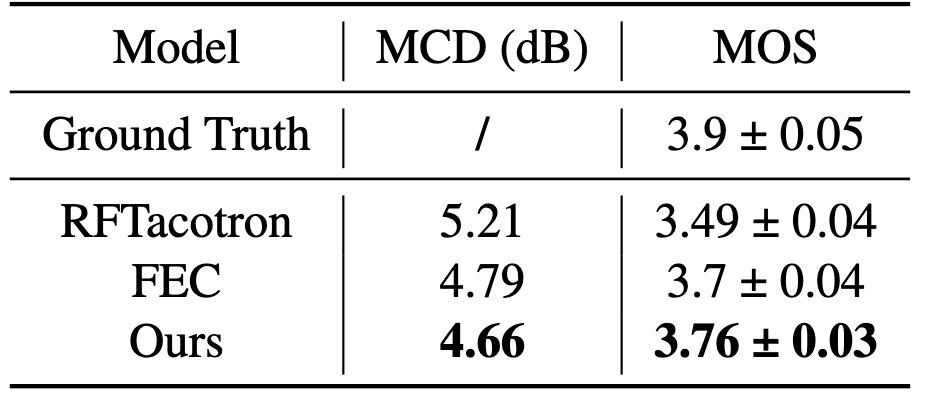

Finally, Mel Cepstral Distortion (MCD) and Naturalness Mean Opinion Score (MOS) evaluations are conducted on all synthesized samples. The results show that the quality and naturalness of the speech synthesized by our model surpass those of all baseline models.
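For readers unfamiliar with MCD, the sketch below computes it in its commonly used form: a scaled Euclidean distance between the mel-cepstral coefficients of reference and synthesized frames, averaged over frames, where lower is better. It assumes the two sequences are already time-aligned (for example, by dynamic time warping) and that the 0th (energy) coefficient is excluded. Both are common conventions, not details taken from this summary.

```python
import numpy as np


def mcd(ref, syn):
    """Mean mel-cepstral distortion in dB over aligned frames.

    Assumption: `ref` and `syn` are arrays of shape (frames, coeffs)
    holding time-aligned mel-cepstral coefficients, with the energy
    coefficient stored in column 0.
    """
    ref = np.asarray(ref, dtype=float)
    syn = np.asarray(syn, dtype=float)
    if ref.shape != syn.shape:
        raise ValueError("sequences must be aligned to the same shape")
    # Drop the 0th coefficient, which mostly reflects loudness.
    diff = ref[:, 1:] - syn[:, 1:]
    per_frame = (10.0 / np.log(10.0)) * np.sqrt(2.0 * np.sum(diff ** 2, axis=1))
    return float(per_frame.mean())
```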

To conclude, we propose a fine-grained controllable emotional TTS model based on a novel Rank model. The Rank model captures both inter- and intra-class distance information and is thus able to produce meaningful intensity representations. We conduct subjective and objective tests to evaluate our model. The experimental results show that it surpasses two state-of-the-art baselines in intensity controllability, emotion expressiveness, and naturalness.

The results of this study were presented at ICASSP 2023 in Rhodes, Greece.